CHICAGO (AP) — Michael Williams’ wife pleaded with him to remember their fishing trips with the grandchildren, how he used to braid her hair, anything to jar him back to his world outside the concrete walls of Cook County Jail.

His three daily calls to her had become a lifeline, but when they dwindled to two, then one, then only a few a week, the 65-year-old Williams felt he couldn’t go on. He made plans to take his life with a stash of pills he had stockpiled in his dormitory.

Williams was jailed last August, accused of murdering a young man from the neighborhood who asked him for a ride during a night of unrest over police brutality in May. But the key evidence against Williams didn’t come from an eyewitness or an informant; it came from a clip of noiseless security video showing a car driving through an intersection, and a loud bang picked up by a network of surveillance microphones. Prosecutors said technology powered by a secret algorithm that analyzed noises detected by the sensors indicated Williams shot and killed the man.

“I kept trying to figure out, how can they get away with using the technology like that against me?” said Williams, speaking publicly for the first time about his ordeal. “That’s not fair.”

Williams sat behind bars for nearly a year before a judge dismissed the case against him last month at the request of prosecutors, who said they had insufficient evidence.

Williams’ experience highlights the real-world impacts of society’s growing reliance on algorithms to help make consequential decisions about many aspects of public life. Nowhere is this more apparent than in law enforcement, which has turned to technology companies like gunshot detection firm ShotSpotter to battle crime. ShotSpotter evidence has increasingly been admitted in court cases around the country, now totaling some 200. ShotSpotter’s website says it’s “a leader in precision policing technology solutions” that helps stop gun violence by using “sensors, algorithms and artificial intelligence” to classify 14 million sounds in its proprietary database as gunshots or something else.

But an Associated Press investigation, based on a review of thousands of internal documents, emails, presentations and confidential contracts, along with interviews with dozens of public defenders in communities where ShotSpotter has been deployed, has identified a number of serious flaws in using ShotSpotter as evidentiary support for prosecutors.

AP’s investigation found the system can miss live gunfire right under its microphones, or misclassify the sounds of fireworks or cars backfiring as gunshots. Forensic reports prepared by ShotSpotter’s employees have been used in court to improperly claim that a defendant shot at police, or provide questionable counts of the number of shots allegedly fired by defendants. Judges in a number of cases have thrown out the evidence.

ShotSpotter’s proprietary algorithms are the company’s primary selling point, and it frequently touts the technology in marketing materials as virtually foolproof. But the private company guards how its closed system works as a trade secret, a black box largely inscrutable to the public, jurors and police oversight boards.

The company’s methods for identifying gunshots aren’t always guided solely by the technology. ShotSpotter employees can, and often do, change the source of sounds picked up by its sensors after listening to audio recordings, introducing the possibility of human bias into the gunshot detection algorithm. Employees can and do modify the location or number of shots fired at the request of police, according to court records. And in the past, city dispatchers or police themselves could also make some of these changes.

Amid a nationwide debate over racial bias in policing, privacy and civil rights advocates say ShotSpotter’s system and other algorithm-based technologies used to set everything from prison sentences to probation rules lack transparency and oversight and show why the criminal justice system shouldn’t outsource some of society’s weightiest decisions to computer code.

When pressed about potential errors from the company’s algorithm, ShotSpotter CEO Ralph Clark declined to discuss specifics about its use of artificial intelligence, saying it’s “not really relevant.”

“The point is anything that ultimately gets produced as a gunshot has to have eyes and ears on it,” said Clark in an interview. “Human eyes and ears, OK?”

——-

This story, supported by the Pulitzer Center for Crisis Reporting, is part of an ongoing Associated Press series, “Tracked,” that investigates the power and consequences of decisions driven by algorithms on people’s everyday lives.

——-

A GAME CHANGER

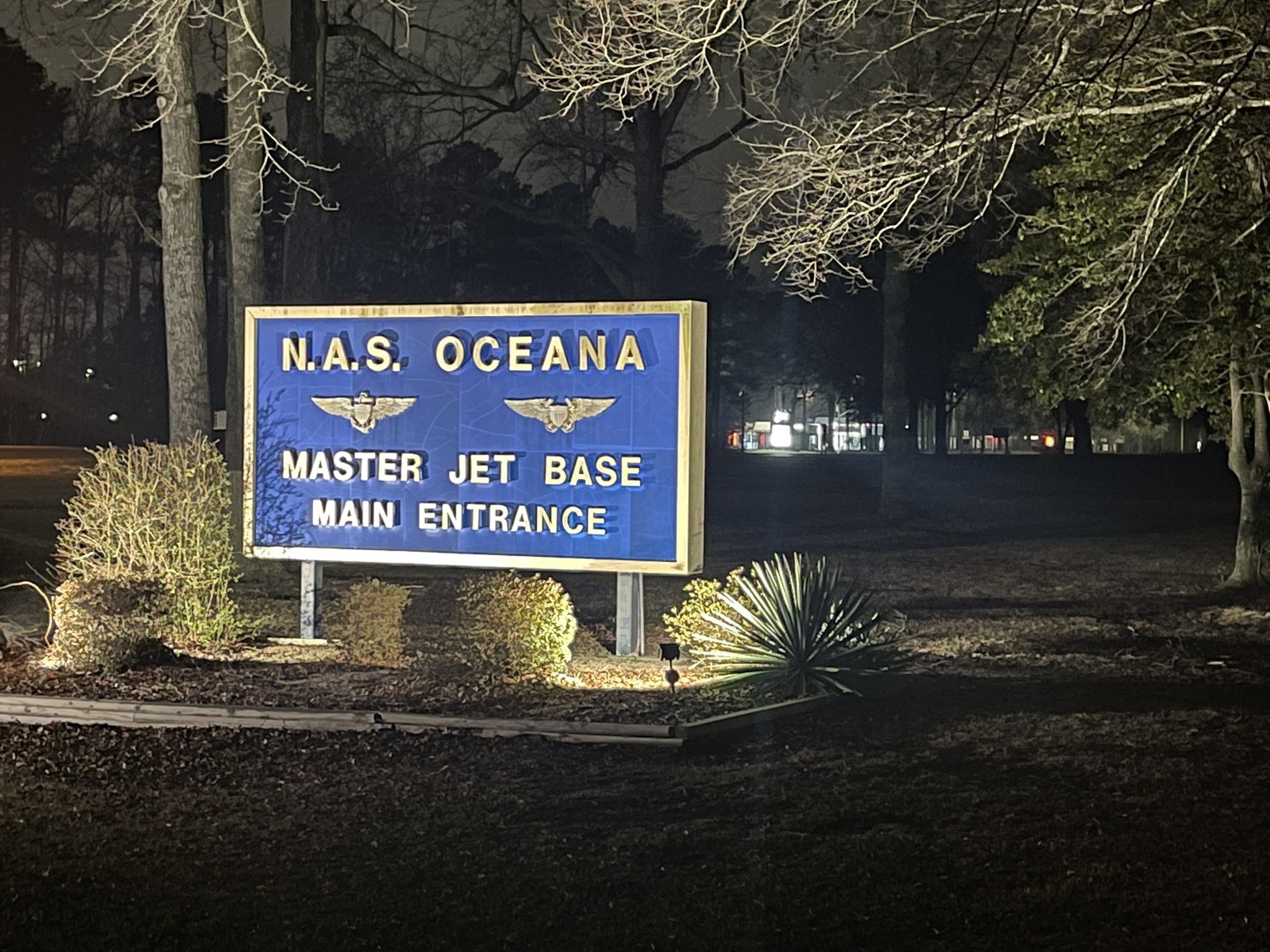

Police chiefs call ShotSpotter a game-changer. The technology, which has been installed in about 110 American cities, large and small, can cost up to $95,000 per square mile per year. The system is usually placed at the request of local officials in neighborhoods deemed to be the highest risk for gun violence, which are often disproportionately Black and Latino communities. Law enforcement officials say it helps get officers to crime scenes quicker and helps cash-strapped public safety agencies better deploy their resources.

“ShotSpotter has turned into one of the most important cogs in our wheel of addressing gun violence,” said Toledo, Ohio, Police Chief George Kral during a 2019 International Association of Chiefs of Police conference in Chicago.

Researchers who took a look at ShotSpotter’s impacts in communities where it is used came to a different conclusion. One study published in April in the peer-reviewed Journal of Urban Health examined ShotSpotter in 68 large, metropolitan counties from 1999 to 2016, the largest review to date. It found that the technology didn’t reduce gun violence or increase community safety.

“The evidence that we’ve produced suggests that the technology does not reduce firearm violence in the long-term, and the implementation of the technology does not lead to increased murder or weapons related arrests,” said lead author Mitch Doucette.

ShotSpotter installs its acoustic sensors on buildings, telephone poles and street lights. Employees in a dark, restricted-access room study hundreds of thousands of gunfire alerts on multiple computer screens at the company’s headquarters about 35 miles south of San Francisco or a newer office in Washington.

Forensic tools like DNA and ballistics evidence used by prosecutors have had their methodologies examined in painstaking detail for decades, but ShotSpotter claims its software is proprietary, and won’t release its algorithm. The company’s privacy policy says sensor locations aren’t divulged to police departments, although community members can see them on their street lamps. The company has shielded internal data and records revealing the system’s inner workings, leaving defense attorneys no way of interrogating the technology to understand the specifics of how it works.

“We have a constitutional right to confront all witnesses and evidence against us, but in this case the ShotSpotter system is the accuser, and there is no way to determine if it’s accurate, monitored, calibrated or if someone’s added something,” said Katie Higgins, a defense attorney who has successfully fought ShotSpotter evidence. “The most serious consequence is being convicted of a crime you didn’t commit using this as evidence.”

The Silicon Valley startup launched 25 years ago backed by venture capitalist Gary Lauder, heir to Estée Lauder’s makeup fortune. Today, the billionaire remains the company’s largest investor.

ShotSpotter’s profile has grown in recent years.

The U.S. government has spent more than $6.9 million on gunshot detection systems, including ShotSpotter, in discretionary grants and earmarked funds, the Justice Department said in response to questions from AP. States and local governments have spent millions more, from a separate pool of federal tax dollars, to purchase the system.

The company’s share price has more than doubled since it went public in 2017 and it posted revenue of nearly $30 million in the first half of 2021. It’s hardly ubiquitous, however. ShotSpotter’s website lists 119 communities in the U.S., the Caribbean and South Africa where it operates. The company says it has deployed 18,000 sensors covering 810 square miles.

In 2018, it acquired a predictive policing company called HunchLab, which integrates its AI models with ShotSpotter’s gunshot detection data to purportedly predict crime before it happens.

That system can “forecast when and where crimes are likely to emerge and recommends specific patrols and tactics that can deter these events,” according to the company’s 2020 annual report filed with the Securities and Exchange Commission. The company said it plans to expand in Latin America and other regions of the world. It recently appointed Roberta Jacobson, the former U.S. Ambassador to Mexico, to its board.

Late last year, a Trump administration commission on law enforcement urged increased funding for systems like ShotSpotter to “combat firearm crime and violence.”

And amid rising homicides, this spring, the Biden administration nominated David Chipman, a former ShotSpotter executive, to head the Bureau of Alcohol, Tobacco, Firearms and Explosives.

In June, President Joe Biden encouraged mayors to use American Rescue Plan funds — aimed at speeding up the U.S. pandemic recovery — to buy gunshot detection systems, “to better see and stop gun violence in their communities.”

‘SOMETHING IN ME HAD JUST DIED’

On a balmy Sunday evening in May 2020, Williams and his wife Jacqueline Anderson settled in at their apartment building on Chicago’s South Side. They fed their Rottweiler Lily and German shepherd Shibey. Anderson fell asleep. Williams said he left the house to buy cigarettes at a local gas station.

Looters had beaten him to it. Six days before in Minneapolis, George Floyd had been killed by police officer Derek Chauvin. Four hundred miles away, in Williams’ neighborhood, outrage boiled over. Shops were torn up, store windows broken, fires burned.

Williams found the gas station destroyed, so he said he made a U-turn to head home on South Stony Island Avenue. Before he reached East 63rd Street, Williams said Safarian Herring, a 25-year-old he said he had seen around the neighborhood, waved him down for a ride.

“I didn’t feel threatened or anything because I’ve seen him before, around. So, I said yes. And he got in the front seat, and we took off,” Williams said.

According to documents AP obtained through an open records request, Williams told police that as he approached an intersection another vehicle pulled up beside his car. A man in the front passenger seat fired a shot. The bullet missed Williams but hit his passenger.

“It shocked me so badly, the only thing I can do was slump down in my car,” he said. As Herring bled all over the seat from wounds to the side of his head, Williams ran a red light to escape.

“I was hollering to my passenger ‘Are you ok?’” said Williams. “He didn’t respond.”

Williams drove his passenger to St. Bernard Hospital, where medical workers rushed Herring into the emergency room and doctors fought to save his life.

Samona Nicholson, Herring’s mother, said that two weeks before Williams picked him up, the aspiring chef had survived a shooting at a bus stop. Nicholson, who called her son “Pook,” arranged for him to stay with a relative where she thought he’d be safe.

Doctors pronounced Herring dead on June 2, 2020, at 2:53 p.m.

For days after the shooting, Williams’ wife said he curled up on his bed, having flashbacks and praying for his passenger.

Three months after Herring’s death, the police showed up. Williams recalls officers told him they wanted to take him to the station to talk and assured him he did nothing wrong.

He had a criminal history and had spent three stints behind bars, for attempted murder, robbery and discharging a firearm, records show.

That was all when he was a younger man. Williams said he had moved on with life, avoiding legal trouble since his last release more than 15 years ago and working numerous jobs.

At the police station, detectives interrogated him about the night Herring was shot, then took him to a holding cell.

“They just said that they were charging me with first-degree murder,” Williams said. “When he told me that, it was just like something in me had just died.”

“IT’S NOT PERFECT”

On the night Williams stepped out for cigarettes, ShotSpotter sensors identified a loud noise the system initially assigned to 5700 S. Lake Shore Dr. near Chicago’s historic Museum of Science and Industry alongside Lake Michigan, according to an alert the company sent to police.

That material anchored the prosecutor’s theory that Williams shot Herring inside his car, even though the case supplementary report from police did not cite a motive nor did it mention any eyewitnesses. There was no gun found at the scene of the crime.

Prosecutors also leaned on a surveillance video showing that Williams’ car ran a red light, as did another car that appeared to have its windows up, ruling out the possibility that the shot came from the other car’s passenger window, they said.

Chicago police did not respond to AP’s request for comment. The Cook County State’s Attorney’s Office said in a statement that after careful review prosecutors “concluded that the totality of the evidence was insufficient to meet our burden of proof,” but did not answer specific questions about the case.

As ShotSpotter’s gunshot detection systems have expanded around the country, so has their use as forensic evidence in the courtroom: some 200 times in 20 states since 2010, with 91 of those cases in the past three years, the company said.

“Our data compiled with our expert analysis help prosecutors make convictions,” said a recent ShotSpotter press release. Even during the pandemic, ShotSpotter participated in 18 court cases, some over Zoom, according to a recent company presentation to investors.

But even as its use has expanded in court, ShotSpotter’s technology has drawn scrutiny.

For one, the algorithm that analyzes sounds to distinguish gunshots from other noises has never been peer reviewed by outside academics or experts.

“The concern about ShotSpotter being used as direct evidence is that there are simply no studies out there to establish the validity or the reliability of the technology. Nothing,” said Tania Brief, a staff attorney at The Innocence Project, a nonprofit which seeks to reverse wrongful convictions.

A 2011 study commissioned by the company found that dumpsters, trucks, motorcycles, helicopters, fireworks, construction, trash pick-up and church bells have all triggered false positive alerts, mistaking these sounds for gunshots. Clark said the company is constantly improving its audio classifications, but the system still logs a small percentage of false positives.

In the past, these false alerts — and lack of alerts — have prompted cities from Charlotte, North Carolina to San Antonio, Texas, to end their ShotSpotter contracts, the AP found.

In Fall River, Massachusetts, police said ShotSpotter worked less than 50% of the time and missed all seven shots in a downtown murder in 2018. The results didn’t improve over time, and later that year ShotSpotter turned off its system.

The public school district in Fresno, California ended its ShotSpotter contract last year, after paying $1.25 million over four years and finding it too costly. Also, parents and board members were concerned that district funds meant to help high-needs students were used to pay for ShotSpotter, said school board trustee Genoveva Islas.

“We were at the point where George Floyd had been murdered and there was a lot of push around racism and discrimination in the district. There was this mounting questioning about that investment in particular,” Islas said.

Some courts, too, have been less than impressed with the ShotSpotter system. In 2014, a judge in Richmond, California didn’t allow ShotSpotter evidence to be used during a gang murder conspiracy case, although the accused man, Todd Gillard, was still convicted of being involved in a drive-by shooting.

“The expert testimony that a gun was fired at a particular location at a given time, based on the ShotSpotter technology, is not presently admissible in court, because it has not, at this point, reached general acceptance in the relevant scientific community,” ruled Contra Costa Superior Court Judge John Kennedy.

In a Chicago case, prosecutors had surveillance videos of gang member Ernesto Godinez in a neighborhood where an ATF agent was shot after dark — but none showing him actually shooting a gun. At a 2019 trial, they entered ShotSpotter data to show gunshots originated from the location where video evidence indicated Godinez was when shots rang out. This month, a federal appeals court ruled that a trial judge erred by not vetting the reliability of ShotSpotter data before letting jurors hear it. Nonetheless, the split three-judge panel concluded that other evidence prosecutors presented was enough to uphold Godinez’s conviction.

ShotSpotter says it’s constantly fine-tuning its machine learning model to recognize what is and isn’t a gunshot sound by getting detectives and investigators to add crime scene observations to its system. As a part of that process, which the company calls “ground truth,” ShotSpotter asks patrol officers to record shell casings, bullet holes, witness testimony and other “evidence of gunfire” using its software.

“We have the opportunity to make the machine classification better and better and better because we get real-world feedback loops from humans,” Clark said.

Several experts warned that training an algorithm based on a set of observations submitted by police risks contaminating the model if harried officers — perhaps inadvertently — feed it incomplete or incorrect data.

“I’m kind of aghast,” said Clare Garvie, a senior associate with the Center on Privacy & Technology at Georgetown Law. “You are building an inherent uncertainty into that system, and you are telling that system it’s fine. You are contaminating the reliability of your system.”

ShotSpotter said the more data it receives from police, the more accurate its model becomes. The company says its system is accurate 97% of the time.

“In the small number of cases where ShotSpotter is incorrect, providing feedback to the algorithm can improve accuracy,” the company said.

Beyond the ShotSpotter algorithm, other questions have been raised about how the company operates.

Court records show that in some cases, employees have changed sounds detected by the system to say that they are gunshots.

During 2016 testimony in a Rochester, New York officer-involved shooting trial, ShotSpotter’s engineer Paul Greene was pressed to explain why one of its employees reclassified sounds from a helicopter to a bullet. The reason? He said its customer, in this case the Rochester Police Department, told them to.

The defense attorney in that case was dumbfounded: “Is that something that occurs in the regular course of business at ShotSpotter?” he asked.

“Yes, it is. It happens all the time,” said Greene. “Typically, you know, we trust our law enforcement customers to be really upfront and honest with us.”

Testifying in a 2017 San Francisco murder trial, Greene similarly said that an analyst had moved the location of the initial alert a block away, suddenly matching the scene of the crime.

“It’s not perfect. The dot on the map is simply a starting point,” he said.

In the Williams case, evidence in pre-trial hearings shows that ShotSpotter initially said the noise the sensor picked up was a firecracker, a classification the company’s algorithm made with 98% confidence. But a ShotSpotter employee relabeled the noise as a gunshot.

Later, ShotSpotter senior technical support engineer Walter Collier changed the reported Chicago address of the sound to the street where Williams was driving, about 1 mile away, according to court documents. ShotSpotter said Collier corrected the report to match the actual location that the sensors had identified.

Collier worked for the Chicago Police Department for more than two decades before joining ShotSpotter, according to his LinkedIn profile. After Williams was sent to jail, his attorney requested more information about Collier’s training. The attorney, Brendan Max, said he was shocked by the company’s response.

In court filings, ShotSpotter acknowledged: “Our experts are trained using a variety of ‘on the job’ training sessions, and transfer of knowledge from our scientists and other experienced employees. As such no official or formal training materials exist for our forensic experts.”

Law enforcement officials in Chicago continue to stand by their use of ShotSpotter. Chicago’s three-year, $33 million contract, signed in 2018, makes the city ShotSpotter’s largest customer. ShotSpotter has been at the heart of the police department’s “intelligence-action cycle” for predictive policing that uses gunshot alerts to “identify areas of risk,” according to a presentation obtained by AP.

Late last month, on July 22, Attorney General Merrick Garland flew to Chicago to announce a new initiative to combat gun violence and toured a police precinct, looking on as officials showed him how they use ShotSpotter.

INSUFFICIENT EVIDENCE

The next day, Williams hobbled into Courtroom 500 leaning on his wooden cane, dressed in tan jail garb and sandals, as a sheriff’s deputy towered over him. He had been locked up for 11 months.

Williams lifted his head to the famously irascible Judge Vincent Gaughan. The 79-year-old Vietnam veteran looked back from high on his bench and told Williams his case was dismissed. The reason: insufficient evidence.

ShotSpotter maintains it had warned prosecutors not to rely on its technology to detect gunshots fired inside vehicles or buildings. The company said the disclaimer can be found in the small print embedded in its contract with Chicago police.

But the company declined to say at what point during Williams’ nearly year-long incarceration it got in touch with prosecutors, or why it prepared a forensic report for a gunshot that allegedly was fired in Williams’ vehicle, given the fact that the system had trouble identifying gunshots in enclosed spaces. The report itself contained contradictory information suggesting the technology did, in fact, work inside cars. Clark, the company’s CEO, declined to comment on the case, but in a follow-up statement, the company equivocated, telling AP that under “certain conditions,” the system can actually pick up gunshots inside vehicles.

Max, Williams’ attorney, said prosecutors never disclosed any of this information to him, and instead dropped charges two months after he subpoenaed ShotSpotter for the company’s correspondence with state’s attorneys.

The judge agreed to schedule a hearing in the coming weeks about whether to release ShotSpotter’s operating protocol and other documents the company wants to keep secret. Max, who requested it, said such material could be used to cast doubt on the validity and reliability of ShotSpotter evidence in cases nationwide.

At 9 p.m. on July 22, Williams walked out of Cook County Jail into the hot Chicago night. He had no cellphone, no wallet, no ID. Williams said authorities hadn’t let him make a phone call or returned anything to him. He was picked up by his attorney.

Anderson, his wife of 20 years, was waiting at home. When her husband stepped out of his attorney’s car, she took him in her arms and cried.

That first night at home, Anderson made ribs and chicken, cornbread and macaroni and cheese.

But Williams couldn’t eat on his own. He’d beat COVID-19 twice while in jail, but had developed an uncontrollable tremor in his hand that kept him from holding a spoon. So Anderson fed him. And as they sat together on the couch, she held onto his arm to try and stop the shaking.

For her part, Herring’s mother believes police had the right suspect in Williams. She blames ShotSpotter for botching the case by passing on, then withdrawing, what she called flimsy data.

Williams remains shaken by his ordeal. He said he doesn’t feel safe in his hometown anymore. When he walks through the neighborhood he scans for the little microphones that almost sent him to prison for life.

“The only places these devices are installed are in poor Black communities, nowhere else,” he said. “How many of us will end up in this same situation?”